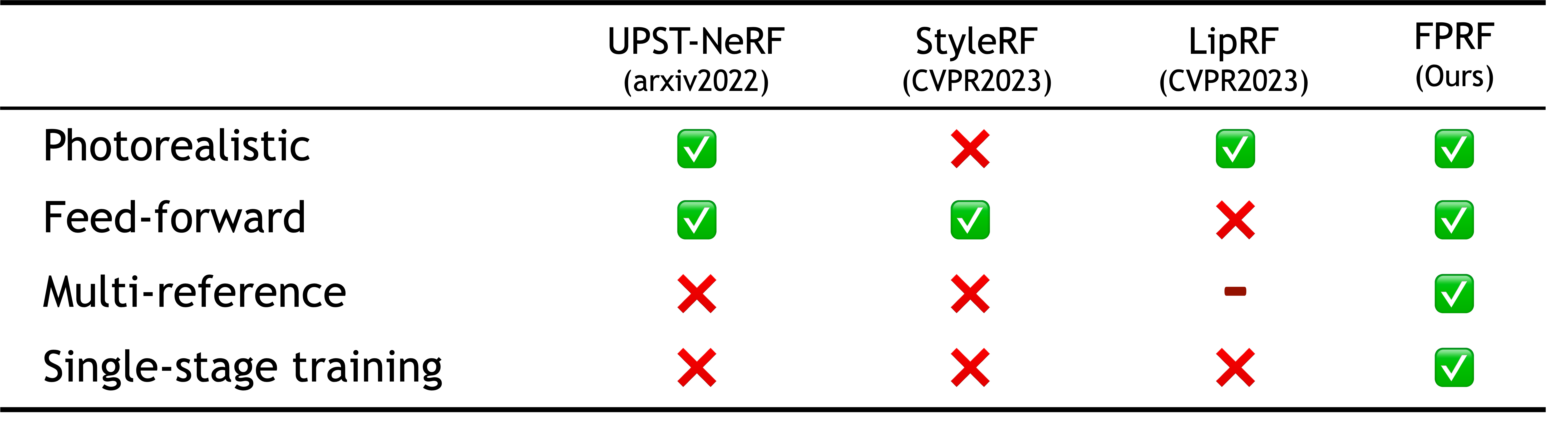

Abstract

We present FPRF, a feed-forward photorealistic style transfer method for large-scale 3D neural radiance fields. FPRF stylizes large-scale 3D scenes with multiple arbitrary style reference images without additional optimization while preserving multi-view appearance consistency. Prior methods required tedious per-style or per-scene optimization and were limited to small-scale 3D scenes. FPRF efficiently stylizes large-scale 3D scenes by introducing a style-decomposed 3D neural radiance field, which inherits AdaIN’s feed-forward stylization machinery and thereby supports arbitrary style reference images.

Furthermore, FPRF supports multi-reference stylization through semantic correspondence matching and local AdaIN, which gives users diverse control over 3D scene styles. FPRF also preserves multi-view consistency by applying the semantic matching and style transfer processes directly to features queried in 3D space. In experiments, we demonstrate that FPRF achieves favorable photorealistic stylization quality on large-scale 3D scenes with diverse reference images.

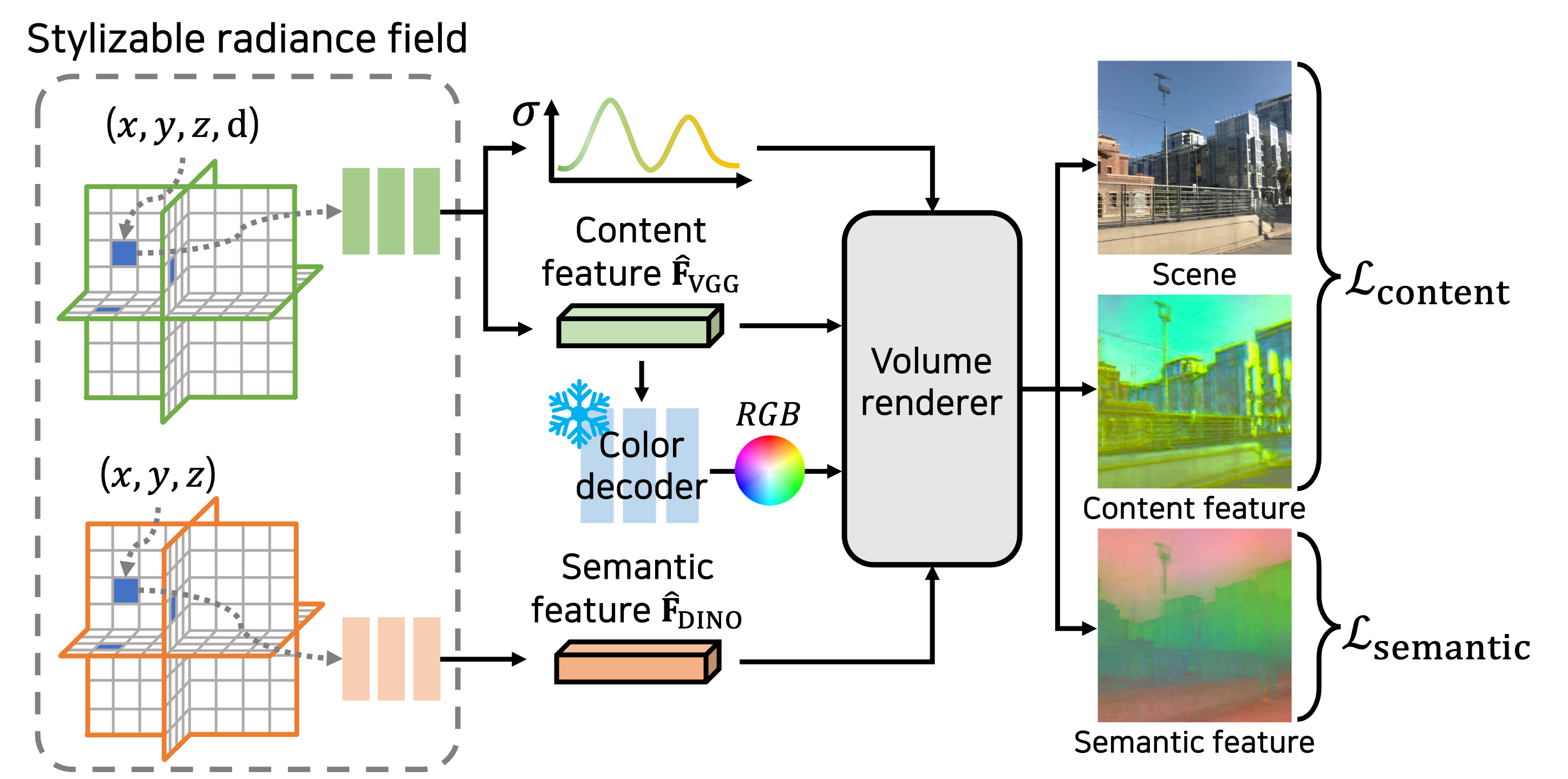

Training 3D Dual Feature Fields

Given a set of scene images and their corresponding VGG and DINO features, FPRF learns a scene content field and a scene semantic field via neural feature distillation. The scene content field is trained with a pre-trained color decoder that is compatible with AdaIN, which allows feed-forward photorealistic style transfer with efficient single-stage training.
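For readers unfamiliar with AdaIN, the operation that the content field is designed to be compatible with can be sketched in a few lines. This is a minimal NumPy illustration of the standard AdaIN formula (re-normalize content features to the channel-wise mean and standard deviation of the style features), not FPRF's implementation. The function name `adain`, the flat `(N, C)` feature layout, and the random example features are assumptions made for illustration.

```python
import numpy as np

def adain(content, style, eps=1e-5):
    """Standard AdaIN on flat feature sets.

    content: (N, C) content features, e.g. features queried at N 3D points
             (this layout is an assumption for the sketch).
    style:   (M, C) style features extracted from a reference image.
    Returns (N, C) features carrying the style's channel-wise statistics.
    """
    c_mean = content.mean(axis=0, keepdims=True)
    c_std = content.std(axis=0, keepdims=True) + eps
    s_mean = style.mean(axis=0, keepdims=True)
    s_std = style.std(axis=0, keepdims=True)
    # Whiten the content statistics, then re-color with the style statistics.
    return s_std * (content - c_mean) / c_std + s_mean

# Hypothetical features: random stand-ins for real VGG-like features.
rng = np.random.default_rng(0)
content_feats = rng.normal(0.0, 1.0, size=(1024, 8))
style_feats = rng.normal(3.0, 2.0, size=(512, 8))
stylized = adain(content_feats, style_feats)
```

After the call, the channel-wise mean and standard deviation of `stylized` match those of `style_feats` up to `eps`. Because no optimization is involved, swapping in a new reference image only requires recomputing two statistics, which is what makes this kind of stylization feed-forward.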

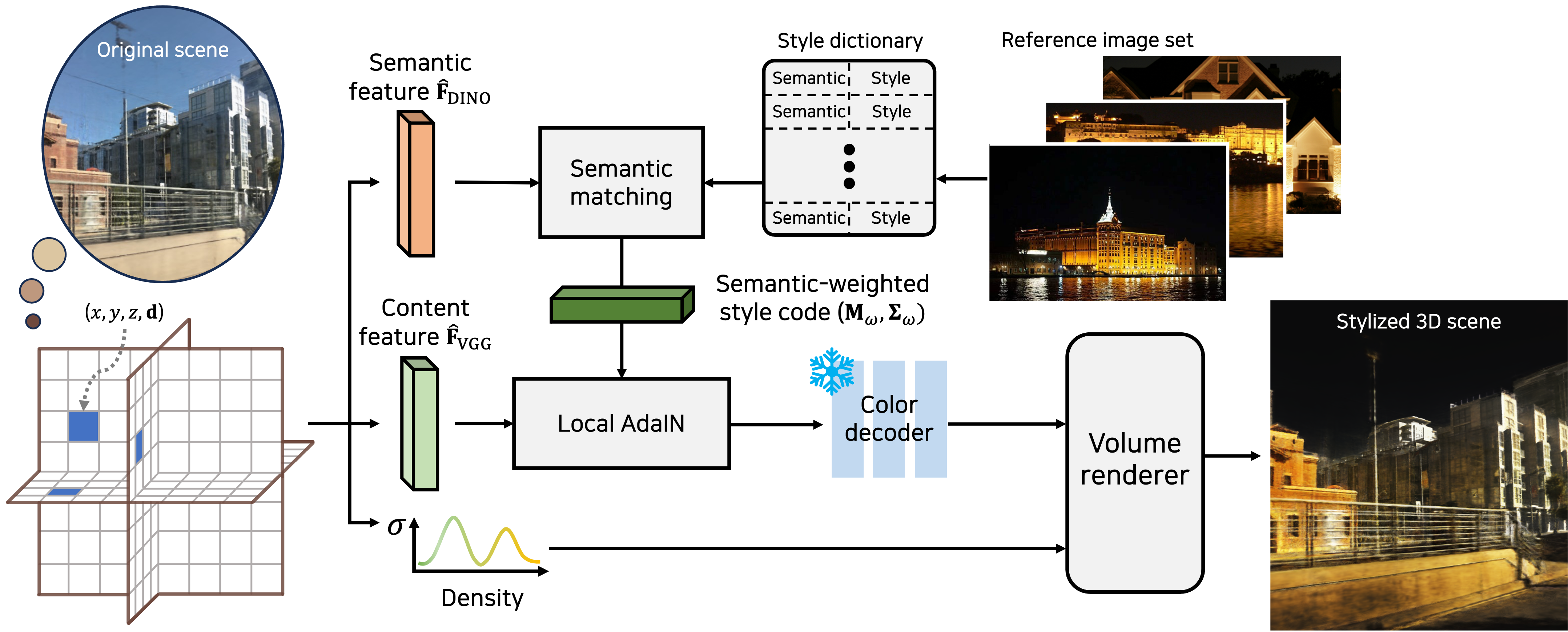

Semantic Matching & Local AdaIN

We stylize the large-scale 3D scene with a set of arbitrary reference images via semantic matching and local AdaIN. We compose a style dictionary consisting of local semantic/style code pairs extracted from the clustered reference images. Using the style dictionary and the semantic features from the scene, we find semantic correspondences between the reference images and the scene. With these correspondences, we construct a semantic-weighted style code and perform local AdaIN for semantic-aware style transfer in a feed-forward manner.
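The matching and local AdaIN steps described above can be sketched roughly as follows. This is an illustrative NumPy sketch under several assumptions, not the authors' implementation: the style dictionary is given as arrays (`dict_sem`, `dict_mean`, `dict_std`, all hypothetical names), correspondence is taken to be a softmax over cosine similarities with a hypothetical `temperature`, and content features are normalized with scene-wide statistics.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def semantic_weights(scene_sem, dict_sem, temperature=0.1):
    """Soft correspondence between scene points and dictionary entries.

    scene_sem: (N, D) semantic features queried from the scene.
    dict_sem:  (K, D) semantic codes of the K reference clusters.
    Returns (N, K) weights; each row sums to 1.
    The softmax and its temperature are assumptions of this sketch.
    """
    sim = l2_normalize(scene_sem) @ l2_normalize(dict_sem).T
    logits = sim / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    w = np.exp(logits)
    return w / w.sum(axis=1, keepdims=True)

def local_adain(scene_content, scene_sem, dict_sem, dict_mean, dict_std,
                temperature=0.1, eps=1e-5):
    """AdaIN with a per-point, semantic-weighted style code.

    dict_mean, dict_std: (K, C) style codes (channel-wise mean and std)
    paired with the K semantic codes in the dictionary.
    """
    w = semantic_weights(scene_sem, dict_sem, temperature)  # (N, K)
    style_mean = w @ dict_mean                               # (N, C)
    style_std = w @ dict_std                                 # (N, C)
    # Normalizing with scene-wide content statistics is an assumption.
    c_mean = scene_content.mean(axis=0, keepdims=True)
    c_std = scene_content.std(axis=0, keepdims=True) + eps
    return style_std * (scene_content - c_mean) / c_std + style_mean

# Hypothetical toy setup: 2 reference clusters, 6 scene points.
dict_sem = np.array([[1.0, 0.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0, 0.0]])
dict_mean = np.array([[0.8, 0.2, 0.2], [0.2, 0.2, 0.8]])
dict_std = np.array([[0.1, 0.1, 0.1], [0.3, 0.3, 0.3]])
scene_sem = np.repeat(dict_sem, 3, axis=0)  # three points per semantic region
scene_content = np.random.default_rng(0).normal(size=(6, 3))

weights = semantic_weights(scene_sem, dict_sem)
stylized = local_adain(scene_content, scene_sem, dict_sem, dict_mean, dict_std)
```

In this toy setup the first three points are assigned almost entirely to the first dictionary entry and the last three to the second, so each semantic region receives the style statistics of its matching reference cluster. Since the weights depend only on features attached to 3D points, the same point receives the same style code from every viewpoint.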